Matrix Addition, Scalar Multiplication, and Transposition

A rectangular array of numbers is called a matrix (the plural is matrices), and the numbers are called the entries of the matrix. Matrices are usually denoted by uppercase letters: $A$, $B$, $C$, and so on. Hence,

are matrices. Clearly matrices come in various shapes depending on the number of rows and columns. For example, the matrix shown has

rows and

columns. In general, a matrix with $m$ rows and $n$ columns is referred to as an $m \times n$ matrix or as having size $m \times n$. Thus matrices $A$, $B$, and $C$ above have sizes

,

, and

, respectively. A matrix of size $1 \times n$ is called a row matrix, whereas one of size $m \times 1$ is called a column matrix. Matrices of size $n \times n$ for some $n$ are called square matrices.

Each entry of a matrix is identified by the row and column in which it lies. The rows are numbered from the top down, and the columns are numbered from left to right. Then the $(i, j)$-entry of a matrix is the number lying simultaneously in row $i$ and column $j$. For example,

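A special notation is commonly used for the entries of a matrix. If $A$ is an $m \times n$ matrix, and if the $(i, j)$-entry of $A$ is denoted as $a_{ij}$, then $A$ is displayed as follows:

$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$

This is usually denoted simply as $A = [a_{ij}]$. Thus $a_{ij}$ is the entry in row $i$ and column $j$ of $A$. For example, a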

matrix in this notation is written

It is worth pointing out a convention regarding rows and columns: Rows are mentioned before columns. For example:

- If a matrix has size $m \times n$, it has $m$ rows and $n$ columns.

- If we speak of the $(i, j)$-entry of a matrix, it lies in row $i$ and column $j$.

- If an entry is denoted $a_{ij}$, the first subscript $i$ refers to the row and the second subscript $j$ to the column in which $a_{ij}$ lies.

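Two points $(x_1, y_1)$ and $(x_2, y_2)$ in the plane are equal if and only if they have the same coordinates, that is, $x_1 = x_2$ and $y_1 = y_2$. Similarly, two matrices $A$ and $B$ are called equal (written $A = B$) if and only if:

- They have the same size.

- Corresponding entries are equal.

If the entries of $A$ and $B$ are written in the form $A = [a_{ij}]$, $B = [b_{ij}]$, described earlier, then the second condition takes the following form:

$$[a_{ij}] = [b_{ij}] \text{ means } a_{ij} = b_{ij} \text{ for all } i \text{ and } j$$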

Example :

Given ,

and

discuss the possibility that ,

,

.

Solution:

is impossible because

and

are of different sizes:

is

whereas

is

. Similarly,

is impossible. But

is possible provided that corresponding entries are equal:

means ,

,

, and

.

Matrix Addition

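If $A$ and $B$ are matrices of the same size, their sum $A + B$ is the matrix formed by adding corresponding entries. If $A = [a_{ij}]$ and $B = [b_{ij}]$, this takes the form

$$A + B = [a_{ij} + b_{ij}]$$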

Note that addition is not defined for matrices of different sizes.
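As an illustration of entrywise addition, here is a minimal Python sketch. It assumes a matrix is stored as a list of rows; the function name `mat_add` and the sample matrices `P` and `Q` are hypothetical and do not come from the text.

```python
def mat_add(A, B):
    """Return the sum of two matrices stored as lists of rows."""
    # Addition is only defined when both matrices have the same size.
    same_rows = len(A) == len(B)
    if not same_rows or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("addition is only defined for matrices of the same size")
    # Add corresponding entries.
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


# Hypothetical 2 x 2 matrices chosen only for illustration.
P = [[1, 2], [3, 4]]
Q = [[10, 20], [30, 40]]
S = mat_add(P, Q)
```

Raising an error on a size mismatch mirrors the remark that the sum of matrices of different sizes is simply not defined.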

Example 2 :

If

and ,

compute .

Solution:

Example 3 :

Find ,

, and

if

.

Solution:

Add the matrices on the left side to obtain

Because corresponding entries must be equal, this gives three equations: ,

, and

. Solving these yields

,

,

.

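If $A$, $B$, and $C$ are any matrices of the same size, then

$$A + B = B + A \quad \text{(commutative law)}$$

$$A + (B + C) = (A + B) + C \quad \text{(associative law)}$$

In fact, if $A = [a_{ij}]$ and $B = [b_{ij}]$, then the $(i, j)$-entries of $A + B$ and $B + A$ are, respectively, $a_{ij} + b_{ij}$ and $b_{ij} + a_{ij}$. Since these are equal for all $i$ and $j$, we get

$$A + B = [a_{ij} + b_{ij}] = [b_{ij} + a_{ij}] = B + A$$

The associative law is verified similarly.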

The $m \times n$ matrix in which every entry is zero is called the $m \times n$ zero matrix and is denoted as $0$ (or $0_{mn}$ if it is important to emphasize the size). Hence,

$$0 + X = X$$

holds for all $m \times n$ matrices $X$. The negative of an $m \times n$ matrix $A$ (written $-A$) is defined to be the $m \times n$ matrix obtained by multiplying each entry of $A$ by $-1$. If $A = [a_{ij}]$, this becomes $-A = [-a_{ij}]$. Hence,

$$A + (-A) = 0$$

holds for all matrices $A$ where, of course, $0$ is the zero matrix of the same size as $A$.

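A closely related notion is that of subtracting matrices. If $A$ and $B$ are two $m \times n$ matrices, their difference $A - B$ is defined by

$$A - B = A + (-B)$$

Note that if $A = [a_{ij}]$ and $B = [b_{ij}]$, then

$$A - B = [a_{ij}] + [-b_{ij}] = [a_{ij} - b_{ij}]$$

is the $m \times n$ matrix formed by subtracting corresponding entries.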
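The negative and the difference can be sketched in Python by following the definitions literally: the difference is computed as a sum with the negative. The names `mat_neg`, `mat_add`, and `mat_sub` and the sample matrices are assumptions made for illustration only.

```python
def mat_neg(A):
    """Return -A: every entry multiplied by -1."""
    return [[-a for a in row] for row in A]


def mat_add(A, B):
    """Return A + B, assuming A and B have the same size."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mat_sub(A, B):
    """Return A - B, defined as A + (-B)."""
    return mat_add(A, mat_neg(B))


# Hypothetical sample matrices.
M = [[5, 7], [1, 0]]
N = [[2, 3], [4, -1]]
difference = mat_sub(M, N)           # subtracts corresponding entries
cancelled = mat_add(M, mat_neg(M))   # M + (-M) should be the zero matrix
```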

Example 4 :

Solution:

Example 5 :Solve

where

We solve a numerical equation $a + x = b$ by subtracting the number $a$ from both sides to obtain $x = b - a$. This also works for matrices. To solve

simply subtract the matrix

from both sides to get

The reader should verify that this matrix does indeed satisfy the original equation.

The solution in Example 2.1.5 solves the single matrix equation $A + X = B$ directly via matrix subtraction: $X = B - A$. This ability to work with matrices as entities lies at the heart of matrix algebra.

It is important to note that the sizes of matrices involved in some calculations are often determined by the context. For example, if

then and

must be the same size (so that

makes sense), and that size must be

(so that the sum is

). For simplicity we shall often omit reference to such facts when they are clear from the context.

Scalar Multiplication

In Gaussian elimination, multiplying a row of a matrix by a number $k$ means multiplying every entry of that row by $k$.

More generally, if $A$ is any matrix and $k$ is any number, the scalar multiple $kA$ is the matrix obtained from $A$ by multiplying each entry of $A$ by $k$. If $A = [a_{ij}]$, this is $kA = [ka_{ij}]$.
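A minimal Python sketch of scalar multiplication follows, again assuming a matrix is stored as a list of rows. The name `scalar_mul` and the sample matrix `M` are hypothetical.

```python
def scalar_mul(k, A):
    """Return the scalar multiple kA: every entry of A multiplied by k."""
    return [[k * a for a in row] for row in A]


# Hypothetical 2 x 2 matrix used only for illustration.
M = [[1, -2], [0, 4]]
tripled = scalar_mul(3, M)
zeroed = scalar_mul(0, M)   # multiplying by the scalar 0 gives the zero matrix
```

The result always has the same size as the input, whatever the scalar.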

The term scalar arises here because the set of numbers from which the entries are drawn is usually referred to as the set of scalars. We have been using real numbers as scalars, but we could equally well have been using complex numbers.

Example 6 :

If

and

compute ,

, and

.

Solution:

If $A$ is any matrix, note that $kA$ is the same size as $A$ for all scalars $k$. We also have

$$0A = 0 \quad \text{and} \quad k0 = 0$$

because the zero matrix has every entry zero. In other words, $kA = 0$ if either $k = 0$ or $A = 0$. The converse of this statement is also true, as Example 2.1.7 shows.

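Example 7 :

If $kA = 0$, show that either $k = 0$ or $A = 0$.

Solution:

Write $A = [a_{ij}]$ so that $kA = 0$ means $ka_{ij} = 0$ for all $i$ and $j$. If $k = 0$, there is nothing to do. If $k \neq 0$, then $ka_{ij} = 0$ implies that $a_{ij} = 0$ for all $i$ and $j$; that is, $A = 0$.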

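Theorem 2.1.1

Let $A$, $B$, and $C$ denote arbitrary $m \times n$ matrices where $m$ and $n$ are fixed. Let $k$ and $p$ denote arbitrary real numbers. Then

1. $A + B = B + A$.

2. $A + (B + C) = (A + B) + C$.

3. There is an $m \times n$ matrix $0$, such that $0 + A = A$ for each $A$.

4. For each $A$ there is an $m \times n$ matrix, $-A$, such that $A + (-A) = 0$.

5. $k(A + B) = kA + kB$.

6. $(k + p)A = kA + pA$.

7. $(kp)A = k(pA)$.

8. $1A = A$.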

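Proof:

Properties 1–4 were given previously. To check Property 5, let $A = [a_{ij}]$ and $B = [b_{ij}]$ denote matrices of the same size. Then $A + B = [a_{ij} + b_{ij}]$, as before, so the $(i, j)$-entry of $k(A + B)$ is

$$k(a_{ij} + b_{ij}) = ka_{ij} + kb_{ij}$$

But this is just the $(i, j)$-entry of $kA + kB$, and it follows that $k(A + B) = kA + kB$. The other Properties can be similarly verified; the details are left to the reader.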
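The scalar properties in the theorem can be spot-checked numerically. The sketch below is not a proof; it only evaluates both sides of Properties 5 to 8 for one hypothetical choice of matrices and scalars. The helper names `add` and `scale` are assumptions introduced for the illustration.

```python
def add(A, B):
    """Entrywise sum of two matrices of the same size."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def scale(k, A):
    """Scalar multiple kA."""
    return [[k * a for a in row] for row in A]


# Hypothetical 2 x 3 matrices and scalars; integers keep the arithmetic exact.
A = [[1, -2, 0], [3, 4, 5]]
B = [[6, 0, -1], [2, 2, 2]]
k, p = 3, -2

checks = {
    "property 5": scale(k, add(A, B)) == add(scale(k, A), scale(k, B)),
    "property 6": scale(k + p, A) == add(scale(k, A), scale(p, A)),
    "property 7": scale(k * p, A) == scale(k, scale(p, A)),
    "property 8": scale(1, A) == A,
}
```

Each value in `checks` compares the two sides of one property for this particular sample.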

The Properties in Theorem 2.1.1 enable us to do calculations with matrices in much the same way that numerical calculations are carried out. To begin, Property 2 implies that the sum

$$(A + B) + C = A + (B + C)$$

is the same no matter how it is formed and so is written as $A + B + C$. Similarly, the sum

$$A + B + C + D$$

is independent of how it is formed; for example, it equals both $(A + B) + (C + D)$ and $A + [B + (C + D)]$. Furthermore, Property 1 ensures that, for example,

$$B + D + A + C = A + B + C + D$$

In other words, the order in which the matrices are added does not matter. A similar remark applies to sums of five (or more) matrices.

Properties 5 and 6 in Theorem 2.1.1 are called distributive laws for scalar multiplication, and they extend to sums of more than two terms. For example,

$$k(A + B + C) = kA + kB + kC$$

$$(k + p + q)A = kA + pA + qA$$

Similar observations hold for more than three summands. These facts, together with properties 7 and 8, enable us to simplify expressions by collecting like terms, expanding, and taking common factors in exactly the same way that algebraic expressions involving variables and real numbers are manipulated. The following example illustrates these techniques.

Example 8 :

Simplify where

and

are all matrices of the same size.

Solution:

The reduction proceeds as though $A$, $B$, and $C$ were variables.

Transpose of a Matrix

Many results about a matrix $A$ involve the rows of $A$, and the corresponding result for columns is derived in an analogous way, essentially by replacing the word row by the word column throughout. The following definition is made with such applications in mind.

If $A$ is an $m \times n$ matrix, the transpose of $A$, written $A^T$, is the $n \times m$ matrix whose rows are just the columns of $A$ in the same order.

In other words, the first row of $A^T$ is the first column of $A$ (that is, it consists of the entries of column 1 in order). Similarly the second row of $A^T$ is the second column of $A$, and so on.

Solution:

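If $A = [a_{ij}]$ is a matrix, write $A^T = [b_{ij}]$. Then $b_{ij}$ is the $j$th element of the $i$th row of $A^T$ and so is the $j$th element of the $i$th column of $A$. This means $b_{ij} = a_{ji}$, so the definition of $A^T$ can be stated as follows:

$$\text{If } A = [a_{ij}], \text{ then } A^T = [a_{ji}]. \tag{2.1}$$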

This is useful in verifying the following properties of transposition.

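Theorem 2.1.2 :

Let $A$ and $B$ denote matrices of the same size, and let $k$ denote a scalar.

1. If $A$ is an $m \times n$ matrix, then $A^T$ is an $n \times m$ matrix.

2. $(A^T)^T = A$.

3. $(kA)^T = kA^T$.

4. $(A + B)^T = A^T + B^T$.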

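Proof:

Property 1 is part of the definition of $A^T$, and Property 2 follows from (2.1). As to Property 3: If $A = [a_{ij}]$, then $kA = [ka_{ij}]$, so (2.1) gives

$$(kA)^T = [ka_{ji}] = k[a_{ji}] = kA^T$$

Finally, if $B = [b_{ij}]$, then $A + B = [c_{ij}]$ where $c_{ij} = a_{ij} + b_{ij}$. Then (2.1) gives Property 4:

$$(A + B)^T = [c_{ij}]^T = [c_{ji}] = [a_{ji} + b_{ji}] = [a_{ji}] + [b_{ji}] = A^T + B^T$$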
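The transpose and its properties can be illustrated with a short Python sketch. It assumes matrices are lists of rows; the name `transpose` and the sample matrices are hypothetical, and the checks only test one sample rather than prove the theorem.

```python
def transpose(A):
    """Return the transpose: row i of the result is column i of A."""
    return [list(col) for col in zip(*A)]


def add(A, B):
    """Entrywise sum of two matrices of the same size."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def scale(k, A):
    """Scalar multiple kA."""
    return [[k * a for a in row] for row in A]


# Hypothetical 2 x 3 matrices and scalar.
A = [[1, 2, 3], [4, 5, 6]]
B = [[0, -1, 2], [7, 7, 7]]
k = 5

At = transpose(A)                                   # a 3 x 2 matrix
double_transpose_ok = transpose(At) == A            # transposing twice
scalar_ok = transpose(scale(k, A)) == scale(k, At)  # transpose of kA
sum_ok = transpose(add(A, B)) == add(At, transpose(B))  # transpose of A + B
```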

There is another useful way to think of transposition. If is an

matrix, the elements

are called the main diagonal of

. Hence the main diagonal extends down and to the right from the upper left corner of the matrix

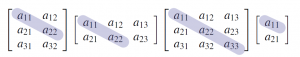

; it is shaded in the following examples:

Thus forming the transpose of a matrix can be viewed as “flipping”

about its main diagonal, or as “rotating”

through

about the line containing the main diagonal. This makes Property 2 in Theorem~?? transparent.

Example :

Solve for if

.

Solution:

Using Theorem 2.1.2, the left side of the equation is

Hence the equation becomes

Thus

, so finally

.

Note that Example 2.1.10 can also be solved by first transposing both sides, then solving for $A^T$, and so obtaining $A = (A^T)^T$. The reader should do this.

One matrix in Example 2.1.9 has the property that it equals its own transpose. Such matrices are important; a matrix $A$ is called symmetric if $A = A^T$. A symmetric matrix $A$ is necessarily square (if $A$ is $m \times n$, then $A^T$ is $n \times m$, so $A = A^T$ forces $n = m$). The name comes from the fact that these matrices exhibit a symmetry about the main diagonal. That is, entries that are directly across the main diagonal from each other are equal.

For example, $\left[ \begin{array}{ccc} a & b & c \\ b^\prime & d & e \\ c^\prime & e^\prime & f \end{array} \right]$ is symmetric when $b = b^\prime$, $c = c^\prime$, and $e = e^\prime$.
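The symmetry condition can be tested entry by entry. The following Python sketch is one possible check, assuming a matrix is a list of rows; the name `is_symmetric` and the sample matrices are hypothetical.

```python
def is_symmetric(A):
    """Return True when A equals its own transpose."""
    n = len(A)
    # A symmetric matrix is necessarily square.
    if any(len(row) != n for row in A):
        return False
    # Entries directly across the main diagonal from each other must agree.
    return all(A[i][j] == A[j][i] for i in range(n) for j in range(i + 1, n))


# Hypothetical samples: a symmetric matrix, a square matrix that is not
# symmetric, and a matrix that is not square.
sym = is_symmetric([[1, 2, 3], [2, 5, 6], [3, 6, 9]])
not_sym = is_symmetric([[1, 2], [3, 4]])
not_square = is_symmetric([[1, 2, 3], [2, 5, 6]])
```

Only the entries above the diagonal need to be compared with their mirror images, since the diagonal entries are always equal to themselves.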

Example 11 :

If $A$ and $B$ are symmetric $n \times n$ matrices, show that $A + B$ is symmetric.

Solution:

We have $A^T = A$ and $B^T = B$, so, by Theorem 2.1.2, we have $(A + B)^T = A^T + B^T = A + B$. Hence $A + B$ is symmetric.

Example :

Suppose a square matrix satisfies

. Show that necessarily

.

Solution:

If we iterate the given equation, Theorem 2.1.2 gives

Subtracting from both sides gives

, so

.

Matrix-Vector Multiplication

Up to now we have used matrices to solve systems of linear equations by manipulating the rows of the augmented matrix. In this section we introduce a different way of describing linear systems that makes more use of the coefficient matrix of the system and leads to a useful way of “multiplying” matrices.

Vectors

It is a well-known fact in analytic geometry that two points in the plane with coordinates $(a_1, a_2)$ and $(b_1, b_2)$ are equal if and only if $a_1 = b_1$ and $a_2 = b_2$. Moreover, a similar condition applies to points $(a_1, a_2, a_3)$ in space. We extend this idea as follows.

An ordered sequence $(a_1, a_2, \dots, a_n)$ of real numbers is called an ordered $n$-tuple. The word “ordered” here reflects our insistence that two ordered $n$-tuples are equal if and only if corresponding entries are the same. In other words,

$$(a_1, a_2, \dots, a_n) = (b_1, b_2, \dots, b_n) \quad \text{if and only if} \quad a_1 = b_1,\ a_2 = b_2,\ \dots,\ \text{and } a_n = b_n$$

Thus the ordered $2$-tuples and $3$-tuples are just the ordered pairs and triples familiar from geometry.

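Let $\mathbb{R}$ denote the set of all real numbers. The set of all ordered $n$-tuples from $\mathbb{R}$ has a special notation:

$$\mathbb{R}^n \text{ denotes the set of all ordered } n\text{-tuples of real numbers.}$$

There are two commonly used ways to denote the $n$-tuples in $\mathbb{R}^n$: As rows $(r_1, r_2, \dots, r_n)$ or columns $\begin{bmatrix} r_1 \\ r_2 \\ \vdots \\ r_n \end{bmatrix}$; the notation we use depends on the context. In any event they are called vectors or $n$-vectors and will be denoted using bold type such as $\mathbf{x}$ or $\mathbf{v}$. For example, an $m \times n$ matrix $A$ will be written as a row of columns:

$$A = \begin{bmatrix} \mathbf{a}_1 & \mathbf{a}_2 & \cdots & \mathbf{a}_n \end{bmatrix} \text{ where } \mathbf{a}_j \text{ denotes column } j \text{ of } A \text{ for each } j$$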

If $\mathbf{x}$ and $\mathbf{y}$ are two $n$-vectors in $\mathbb{R}^n$, it is clear that their matrix sum $\mathbf{x} + \mathbf{y}$ is also in $\mathbb{R}^n$ as is the scalar multiple $k\mathbf{x}$ for any real number $k$. We express this observation by saying that $\mathbb{R}^n$ is closed under addition and scalar multiplication. In particular, all the basic properties in Theorem 2.1.1 are true of these $n$-vectors. These properties are fundamental and will be used frequently below without comment. As for matrices in general, the $n \times 1$ zero matrix is called the zero $n$-vector in $\mathbb{R}^n$ and, if $\mathbf{x}$ is an $n$-vector, the $n$-vector $-\mathbf{x}$ is called the negative of $\mathbf{x}$.

Of course, we have already encountered these $n$-vectors in Section 1.3 as the solutions to systems of linear equations with $n$ variables. In particular we defined the notion of a linear combination of vectors and showed that a linear combination of solutions to a homogeneous system is again a solution. Clearly, a linear combination of $n$-vectors in $\mathbb{R}^n$ is again in $\mathbb{R}^n$, a fact that we will be using.
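A linear combination of $n$-vectors can be computed entry by entry. The Python sketch below assumes each vector is stored as a list of numbers; the name `linear_combination` and the sample vectors are hypothetical.

```python
def linear_combination(coeffs, vectors):
    """Return c1*v1 + c2*v2 + ... for n-vectors stored as lists."""
    if len(coeffs) != len(vectors):
        raise ValueError("need exactly one coefficient per vector")
    n = len(vectors[0])
    if any(len(v) != n for v in vectors):
        raise ValueError("all vectors must have the same number of entries")
    # Entry i of the result combines entry i of every vector.
    return [sum(c * v[i] for c, v in zip(coeffs, vectors)) for i in range(n)]


# Hypothetical 3-vectors: the result is again a 3-vector.
v1 = [1, 0, 3]
v2 = [4, 5, 6]
combo = linear_combination([2, -1], [v1, v2])   # 2*v1 - v2
```

The result has the same number of entries as the inputs, which is the closure fact stated above.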

Matrix-Vector Multiplication

Given a system of linear equations, the left sides of the equations depend only on the coefficient matrix $A$ and the column $\mathbf{x}$ of variables, and not on the constants. This observation leads to a fundamental idea in linear algebra: We view the left sides of the equations as the “product” $A\mathbf{x}$ of the matrix $A$ and the vector $\mathbf{x}$. This simple change of perspective leads to a completely new way of viewing linear systems, one that is very useful and will occupy our attention throughout this book.

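To motivate the definition of the “product” $A\mathbf{x}$, consider first the following system of two equations in three variables:

$$\begin{array}{rcl} a_{11}x_1 + a_{12}x_2 + a_{13}x_3 & = & b_1 \\ a_{21}x_1 + a_{22}x_2 + a_{23}x_3 & = & b_2 \end{array} \tag{2.2}$$

and let $A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \end{bmatrix}$, $\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}$, $\mathbf{b} = \begin{bmatrix} b_1 \\ b_2 \end{bmatrix}$ denote the coefficient matrix, the variable matrix, and the constant matrix, respectively. The system (2.2) can be expressed as a single vector equation

$$\begin{bmatrix} a_{11}x_1 + a_{12}x_2 + a_{13}x_3 \\ a_{21}x_1 + a_{22}x_2 + a_{23}x_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \end{bmatrix}$$

which in turn can be written as follows:

$$x_1 \begin{bmatrix} a_{11} \\ a_{21} \end{bmatrix} + x_2 \begin{bmatrix} a_{12} \\ a_{22} \end{bmatrix} + x_3 \begin{bmatrix} a_{13} \\ a_{23} \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \end{bmatrix}$$

Now observe that the vectors appearing on the left side are just the columns

$$\mathbf{a}_1 = \begin{bmatrix} a_{11} \\ a_{21} \end{bmatrix}, \quad \mathbf{a}_2 = \begin{bmatrix} a_{12} \\ a_{22} \end{bmatrix}, \quad \mathbf{a}_3 = \begin{bmatrix} a_{13} \\ a_{23} \end{bmatrix}$$

of the coefficient matrix $A$. Hence the system (2.2) takes the form

$$x_1\mathbf{a}_1 + x_2\mathbf{a}_2 + x_3\mathbf{a}_3 = \mathbf{b} \tag{2.3}$$

This shows that the system (2.2) has a solution if and only if the constant matrix $\mathbf{b}$ is a linear combination of the columns of $A$, and that in this case the entries of the solution are the coefficients $x_1$, $x_2$, and $x_3$ in this linear combination.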

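Moreover, this holds in general. If $A$ is any $m \times n$ matrix, it is often convenient to view $A$ as a row of columns. That is, if $\mathbf{a}_1, \mathbf{a}_2, \dots, \mathbf{a}_n$ are the columns of $A$, we write

$$A = \begin{bmatrix} \mathbf{a}_1 & \mathbf{a}_2 & \cdots & \mathbf{a}_n \end{bmatrix}$$

and say that $A$ is given in terms of its columns.

Now consider any system of linear equations with $m \times n$ coefficient matrix $A$. If $\mathbf{b}$ is the constant matrix of the system, and if $\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$ is the matrix of variables then, exactly as above, the system can be written as a single vector equation

$$x_1\mathbf{a}_1 + x_2\mathbf{a}_2 + \cdots + x_n\mathbf{a}_n = \mathbf{b} \tag{2.4}$$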

Write the system

in the form given in (2.4).

Solution:

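As mentioned above, we view the left side of (2.4) as the product of the matrix $A$ and the vector $\mathbf{x}$. This basic idea is formalized in the following definition:

Let $A = \begin{bmatrix} \mathbf{a}_1 & \mathbf{a}_2 & \cdots & \mathbf{a}_n \end{bmatrix}$ be an $m \times n$ matrix, written in terms of its columns $\mathbf{a}_1, \mathbf{a}_2, \dots, \mathbf{a}_n$. If $\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$ is any $n$-vector, the product $A\mathbf{x}$ is defined to be the $m$-vector given by:

$$A\mathbf{x} = x_1\mathbf{a}_1 + x_2\mathbf{a}_2 + \cdots + x_n\mathbf{a}_n$$

In other words, if $A$ is $m \times n$ and $\mathbf{x}$ is an $n$-vector, the product $A\mathbf{x}$ is the linear combination of the columns of $A$ where the coefficients are the entries of $\mathbf{x}$ (in order).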
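The definition can be followed literally in code: extract the columns of the matrix and accumulate them with the entries of the vector as coefficients. This Python sketch assumes a matrix is a list of rows and a vector is a list of numbers; the names `columns` and `mat_vec` and the sample data are hypothetical.

```python
def columns(A):
    """Return the columns of A, each as a list."""
    return [list(col) for col in zip(*A)]


def mat_vec(A, x):
    """Return the product Ax as a linear combination of the columns of A."""
    cols = columns(A)
    if len(cols) != len(x):
        raise ValueError("Ax is only defined when x has one entry per column of A")
    m = len(A)
    result = [0] * m
    # Add x_j times column j to the running total, for each j in order.
    for xj, col in zip(x, cols):
        for i in range(m):
            result[i] += xj * col[i]
    return result


# Hypothetical 2 x 3 matrix and 3-vector; the product is a 2-vector.
A = [[1, 2, 3], [4, 5, 6]]
x = [1, 0, -1]
product = mat_vec(A, x)
```

The size check reflects the requirement that the number of entries of the vector match the number of columns of the matrix.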

Note that if $A$ is an $m \times n$ matrix, the product $A\mathbf{x}$ is only defined if $\mathbf{x}$ is an $n$-vector and then the vector $A\mathbf{x}$ is an $m$-vector because this is true of each column $\mathbf{a}_j$ of $A$. But in this case the system of linear equations with coefficient matrix $A$ and constant vector $\mathbf{b}$ takes the form of a single matrix equation

$$A\mathbf{x} = \mathbf{b}$$

The following theorem combines Definition 2.5 and equation (2.4) and summarizes the above discussion. Recall that a system of linear equations is said to be consistent if it has at least one solution.

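Theorem 2.2.1 :

1. Every system of linear equations has the form $A\mathbf{x} = \mathbf{b}$ where $A$ is the coefficient matrix, $\mathbf{b}$ is the constant matrix, and $\mathbf{x}$ is the matrix of variables.

2. The system $A\mathbf{x} = \mathbf{b}$ is consistent if and only if $\mathbf{b}$ is a linear combination of the columns of $A$.

3. If $\mathbf{a}_1, \mathbf{a}_2, \dots, \mathbf{a}_n$ are the columns of $A$ and if $\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$, then $\mathbf{x}$ is a solution to the linear system $A\mathbf{x} = \mathbf{b}$ if and only if $x_1, x_2, \dots, x_n$ are a solution of the vector equation

$$x_1\mathbf{a}_1 + x_2\mathbf{a}_2 + \cdots + x_n\mathbf{a}_n = \mathbf{b}$$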

A system of linear equations in the form $A\mathbf{x} = \mathbf{b}$ as in (1) of Theorem 2.2.1 is said to be written in matrix form. This is a useful way to view linear systems as we shall see.

Theorem 2.2.1 transforms the problem of solving the linear system $A\mathbf{x} = \mathbf{b}$ into the problem of expressing the constant matrix $\mathbf{b}$ as a linear combination of the columns of the coefficient matrix $A$. Such a change in perspective is very useful because one approach or the other may be better in a particular situation; the importance of the theorem is that there is a choice.
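Part 3 of Theorem 2.2.1 suggests a direct way to test a candidate solution: form the linear combination of the columns with the candidate's entries as coefficients and compare it with the constant vector. The Python sketch below assumes exact (integer) data so that equality is meaningful; the name `is_solution` and the sample system are hypothetical.

```python
def is_solution(A, x, b):
    """Check whether x1*a1 + ... + xn*an equals b, where aj is column j of A."""
    cols = [list(col) for col in zip(*A)]
    if len(cols) != len(x) or len(A) != len(b):
        return False
    combo = [sum(xj * col[i] for xj, col in zip(x, cols)) for i in range(len(A))]
    return combo == b


# Hypothetical system:  x1 + 2*x2 = 5  and  3*x1 + 4*x2 = 11.
A = [[1, 2], [3, 4]]
b = [5, 11]
good = is_solution(A, [1, 2], b)   # 1 + 4 = 5 and 3 + 8 = 11
bad = is_solution(A, [0, 0], b)
```

This only verifies a given candidate; finding a solution still requires a method such as Gaussian elimination.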

Example :

If and

, compute

.

Solution:

By Definition 2.5:

.

Example :

Given columns ,

,

, and

in

, write

in the form

where

is a matrix and

is a vector.

Solution:

Here the column of coefficients is

Hence Definition 2.5 gives

where is the matrix with

,

,

, and

as its columns.

Let be the

matrix given in terms of its columns

,

,

, and

.

In each case below, either express as a linear combination of

,

,

, and

, or show that it is not such a linear combination. Explain what your answer means for the corresponding system

of linear equations.

1.

2.

Solution:

By Theorem 2.2.1, is a linear combination of

,

,

, and

if and only if the system

is consistent (that is, it has a solution). So in each case we carry the augmented matrix

of the system

to reduced form.

1. Here

, so the system

has no solution in this case. Hence

is not a linear combination of

,

,

, and

.

2. Now

, so the system

is consistent.

Thus is a linear combination of

,

,

, and

in this case. In fact the general solution is

,

,

, and

where

and

are arbitrary parameters. Hence

for any choice of and

. If we take

and

, this becomes

, whereas taking

gives

.

Taking $A$ to be the zero matrix, we have $0\mathbf{x} = \mathbf{0}$ for all vectors $\mathbf{x}$ by Definition 2.5 because every column of the zero matrix is zero. Similarly, $A\mathbf{0} = \mathbf{0}$ for all matrices $A$ because every entry of the zero vector is zero.

Example :

If , show that

for any vector

in

.

Solution:

If then Definition 2.5 gives

The matrix in Example 2.2.6 is called the

identity matrix,

and we will encounter such matrices again in future. Before proceeding,

we develop some algebraic properties of matrix-vector multiplication

that are used extensively throughout linear algebra.

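Theorem 2.2.2 :

Let $A$ and $B$ be $m \times n$ matrices, and let $\mathbf{x}$ and $\mathbf{y}$ be $n$-vectors in $\mathbb{R}^n$. Then:

1. $A(\mathbf{x} + \mathbf{y}) = A\mathbf{x} + A\mathbf{y}$.

2. $A(a\mathbf{x}) = a(A\mathbf{x}) = (aA)\mathbf{x}$ for all scalars $a$.

3. $(A + B)\mathbf{x} = A\mathbf{x} + B\mathbf{x}$.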

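Proof:

We prove (3); the other verifications are similar and are left as exercises. Let $A = \begin{bmatrix} \mathbf{a}_1 & \mathbf{a}_2 & \cdots & \mathbf{a}_n \end{bmatrix}$ and $B = \begin{bmatrix} \mathbf{b}_1 & \mathbf{b}_2 & \cdots & \mathbf{b}_n \end{bmatrix}$ be given in terms of their columns. Since adding two matrices is the same as adding their columns, we have

$$A + B = \begin{bmatrix} \mathbf{a}_1 + \mathbf{b}_1 & \mathbf{a}_2 + \mathbf{b}_2 & \cdots & \mathbf{a}_n + \mathbf{b}_n \end{bmatrix}$$

If we write $\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$, Definition 2.5 gives

$$\begin{aligned} (A + B)\mathbf{x} &= x_1(\mathbf{a}_1 + \mathbf{b}_1) + x_2(\mathbf{a}_2 + \mathbf{b}_2) + \cdots + x_n(\mathbf{a}_n + \mathbf{b}_n) \\ &= (x_1\mathbf{a}_1 + x_2\mathbf{a}_2 + \cdots + x_n\mathbf{a}_n) + (x_1\mathbf{b}_1 + x_2\mathbf{b}_2 + \cdots + x_n\mathbf{b}_n) \\ &= A\mathbf{x} + B\mathbf{x} \end{aligned}$$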

Theorem 2.2.2 allows matrix-vector computations to be carried out much as in ordinary arithmetic. For example, for any $m \times n$ matrices $A$ and $B$ and any $n$-vectors $\mathbf{x}$ and $\mathbf{y}$, we have:

We will use such manipulations throughout the book, often without mention.
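The three properties of Theorem 2.2.2 can be spot-checked on sample data. The Python sketch below is a numerical check for one hypothetical choice of matrices, vectors, and scalar, not a proof. The helper `mat_vec` computes each entry of the column combination row by row, which gives the same vector as the definition.

```python
def mat_vec(A, x):
    """Product Ax: entry i is the sum of A[i][j] * x[j] over all j."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def mat_add(A, B):
    """Entrywise sum of two matrices of the same size."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mat_scale(k, A):
    """Scalar multiple kA."""
    return [[k * a for a in row] for row in A]


def vec_add(u, v):
    """Entrywise sum of two vectors of the same length."""
    return [a + b for a, b in zip(u, v)]


def vec_scale(k, v):
    """Scalar multiple kv."""
    return [k * a for a in v]


# Hypothetical 3 x 2 matrices, 2-vectors, and scalar (integers, so exact).
A = [[1, 2], [3, 4], [5, 6]]
B = [[0, 1], [-1, 2], [2, 2]]
x, y, a = [2, -1], [3, 5], 4

prop1 = mat_vec(A, vec_add(x, y)) == vec_add(mat_vec(A, x), mat_vec(A, y))
prop2 = (mat_vec(A, vec_scale(a, x)) == vec_scale(a, mat_vec(A, x))
         == mat_vec(mat_scale(a, A), x))
prop3 = mat_vec(mat_add(A, B), x) == vec_add(mat_vec(A, x), mat_vec(B, x))
```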
